Converting an HTML News Page to RSS

This has been my little project this weekend (OK, along with some Christmas preparations too).

Background

As some of you know, I play underwater rugby. The Swedish Underwater Rugby Association communicates with its members mainly through the news page on its site: http://www.ssdf.se/t3.aspx?p=51459 (in Swedish). The page is plain HTML and offers no RSS feed (they should have used SharePoint). My problem is that I never remember to visit the site at regular intervals, so I miss out on things.

Approach

Being a true RSS junkie, that’s what I wanted: to get this news (together with all the other news I follow) in my feed reader. The approach I took, in outline:

- Get the HTML from the news page

- Parse the HTML and make sense of it

- Generate the RSS XML and save it to a file

- Expose the RSS on my own web server

Doing all the parsing by hand didn’t sound very tempting, so I searched around a little and found the HtmlAgilityPack on CodePlex, a framework that lets you query an HTML document the same way you would query an XML document with XPath or XSLT. The release on CodePlex was compiled against .NET Framework 2.0; I simply changed the project’s target framework and recompiled, and it worked like a charm.

Let’s Get Going

Getting the HTML

HtmlAgilityPack can also fetch the HTML for you, using something like:

HtmlWeb hw = new HtmlWeb();
HtmlDocument newsDoc = hw.Load(url);

The problem I had with that (probably due to incompetence on my part) is that I could not get the encoding right, which is very important in Swedish because of our extended alphabet (å, ä, ö). So I ended up fetching the HTML myself and loading it into an HtmlAgilityPack HtmlDocument object:

// Did not manage to solve the encoding bit, so I retrieve the data myself first ...
HttpWebRequest webRequest = (HttpWebRequest) WebRequest.Create(urlToFetch);
HtmlDocument htmlDocument = new HtmlDocument();
using (HttpWebResponse webResponse = (HttpWebResponse) webRequest.GetResponse())
using (Stream responseStream = webResponse.GetResponseStream())
{
    // ... and then apply the encoding while reading the stream into the HtmlAgilityPack object.
    // On .NET Framework, Encoding.Default is the Windows ANSI code page (windows-1252 on a
    // Swedish install); on .NET Core and later it is always UTF-8.
    htmlDocument.Load(responseStream, Encoding.Default);
}

Parsing the HTML

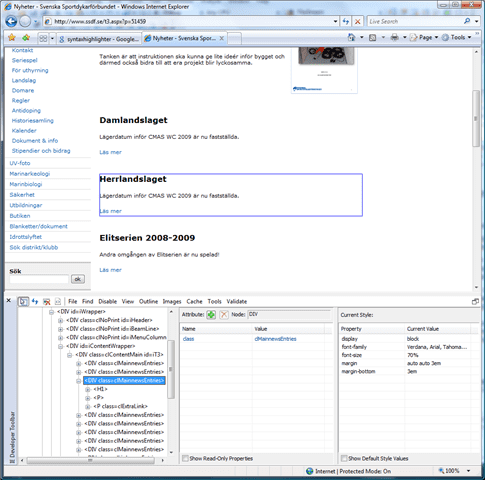

Now it’s time to leverage the power of HtmlAgilityPack, but first I analyzed the HTML by hand using View Source and the IE Developer Toolbar. I found that I could identify each news item by looking for a DIV tag with its class attribute set to clMainnewsEntries.
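To see exactly what that selection matches, here is a small sketch that runs the same XPath expression with the framework’s own System.Xml against a made-up XHTML fragment, next to a slightly more forgiving (hypothetical) variant that also matches a div carrying a second class. System.Xml insists on well-formed markup, which real pages rarely offer; that is the gap HtmlAgilityPack fills, and since it evaluates XPath 1.0 through .NET’s XPath machinery, the same expressions should carry over to its SelectNodes. The class and method names are illustrative only.

```csharp
using System.Collections.Generic;
using System.Xml;

// Illustration only: System.Xml needs well-formed markup, so this runs the
// XPath expressions against a hand-written XHTML fragment. Real-world pages
// are rarely well-formed, which is where HtmlAgilityPack comes in.
public static class NewsSelector
{
    // Exact match, as used in the post: the class attribute must be exactly
    // "clMainnewsEntries".
    public const string ExactClass = "//div[@class='clMainnewsEntries']";

    // Token match (hypothetical variant): also finds a div whose class list
    // contains clMainnewsEntries among other classes.
    public const string TokenClass =
        "//div[contains(concat(' ', normalize-space(@class), ' '), ' clMainnewsEntries ')]";

    // Returns the text of the h1 inside each matching div ("" if there is none).
    public static List<string> SelectTitles(string xhtml, string xpath)
    {
        var doc = new XmlDocument();
        doc.LoadXml(xhtml);

        var titles = new List<string>();
        foreach (XmlNode div in doc.SelectNodes(xpath))
        {
            var h1 = div.SelectSingleNode("h1");
            titles.Add(h1 == null ? "" : h1.InnerText);
        }
        return titles;
    }
}
```

Given a page where one entry has class="clMainnewsEntries" and another has class="clMainnewsEntries first", the first expression returns only the former, while the second returns both, in document order.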

So, let’s get cracking and find those nodes:

HtmlNodeCollection htmlNodeCollection = htmlDocument.DocumentNode.SelectNodes("//div[@class='clMainnewsEntries']");
// SelectNodes returns null, not an empty collection, when nothing matches
if (htmlNodeCollection != null)
{
    foreach (HtmlNode newsNode in htmlNodeCollection)
    {
        // ... generate RSS items ...
    }
}

That was easy; now the HTML starts working against me. A few issues:

No Author

The news items have no author that I can easily get at programmatically. It isn’t really important either, so I’m just setting it to “N/A”.

No Links

Not all news items have links, and when they do, it’s hard to tell whether a link points to the news item or to something else. So to fill the link element in the RSS, I look for a link that has a title attribute (which seems to be how this CMS marks its “read more” links).
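A relative href can come in several shapes (t3.aspx?p=..., /t3.aspx?p=..., ../something), and building the absolute URL by hand is easy to get subtly wrong. System.Uri can resolve an href against the page address the same way a browser does. A minimal sketch; LinkResolver is a hypothetical name and the sample hrefs in the usage note are made up:

```csharp
using System;

// Sketch: turn the href of a "read more" link into an absolute URL by
// resolving it against the address of the news page.
public static class LinkResolver
{
    public static string ToAbsolute(string pageUrl, string href)
    {
        // An empty or missing href means there is no usable link for this item.
        if (string.IsNullOrWhiteSpace(href))
            return string.Empty;

        // Uri(Uri, string) leaves absolute hrefs unchanged and resolves
        // relative ones ("t3.aspx?...", "/t3.aspx?...", "../x") against the base.
        return new Uri(new Uri(pageUrl), href.Trim()).AbsoluteUri;
    }
}
```

With the news page http://www.ssdf.se/t3.aspx?p=51459 as base, both "t3.aspx?p=1" and "/t3.aspx?p=1" resolve to http://www.ssdf.se/t3.aspx?p=1, and an href that is already absolute comes back as-is.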

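string link = string.Empty;
// Run the "read more" XPath once and reuse the result
HtmlNode linkNode = newsNode.SelectSingleNode(".//a[@title!='']");
if (linkNode != null && linkNode.Attributes["href"] != null)
{
    link = linkNode.Attributes["href"].Value;
    if (!link.StartsWith("http"))
    {
        // Relative link: prefix the site root (TrimStart avoids a double slash)
        link = String.Format("{0}{1}", "http://www.ssdf.se/", link.TrimStart('/'));
    }
}

No Publishing Date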

This one is trickier. To add to the confusion, I learned that the editors update a news item when they want to push it to the top of the list. So I simply use the date and time when I first retrieve an item, keeping track of items with a hash (see the next section). This works fine once the program runs at a steady interval, though the first run gives all news items the same date.
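The first-seen bookkeeping described above can be sketched as a dictionary from item hash to the UTC time the item was first seen, saved to and loaded from a small XML file between scheduled runs. FirstSeenStore, its methods and the XML layout are hypothetical, not taken from the post’s source. Storing UTC matters because the "r" format specifier does not convert local times; it just appends "GMT" to whatever value it is given.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Xml.Linq;

// Sketch: remember when each item (keyed by its hash) was first seen and
// persist that map as XML between runs.
public class FirstSeenStore
{
    private readonly Dictionary<string, DateTime> _firstSeen = new Dictionary<string, DateTime>();

    // Returns the stored date for a known hash, or records nowUtc for a new one.
    public DateTime GetOrAdd(string hash, DateTime nowUtc)
    {
        if (!_firstSeen.TryGetValue(hash, out DateTime seen))
        {
            seen = nowUtc;
            _firstSeen[hash] = seen;
        }
        return seen;
    }

    // Hypothetical file layout: <items><item hash="..." firstSeen="..."/></items>
    public XDocument ToXml() =>
        new XDocument(new XElement("items",
            _firstSeen.Select(kv => new XElement("item",
                new XAttribute("hash", kv.Key),
                // "o" is the round-trip format; a UTC value ends in "Z"
                new XAttribute("firstSeen", kv.Value.ToString("o", CultureInfo.InvariantCulture))))));

    public static FirstSeenStore FromXml(XDocument doc)
    {
        var store = new FirstSeenStore();
        foreach (XElement item in doc.Root.Elements("item"))
        {
            // RoundtripKind keeps the "Z", so the value comes back as UTC
            DateTime seen = DateTime.Parse((string)item.Attribute("firstSeen"),
                CultureInfo.InvariantCulture, DateTimeStyles.RoundtripKind);
            store._firstSeen[(string)item.Attribute("hash")] = seen;
        }
        return store;
    }

    // RSS 2.0 expects RFC 822 dates; "r" produces RFC 1123 text but assumes UTC input.
    public static string ToRssDate(DateTime utc) =>
        utc.ToString("r", CultureInfo.InvariantCulture);
}
```

On each run, the program would load the store, call GetOrAdd(hash, DateTime.UtcNow) per item, write pubDate with ToRssDate, and save the store again. An item seen two hours ago keeps its original date even if the editors bump it up the list.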

Guid

To keep track of the items, I calculate a hash for each item and store it in a separate XML file.

public string ComputeHash(string value)
{
    // MD5 only serves to detect changed items here, not as a security measure
    using (System.Security.Cryptography.MD5 md5 = System.Security.Cryptography.MD5.Create())
    {
        // UTF-8 rather than ASCII, which would turn å, ä and ö into '?'
        byte[] data = md5.ComputeHash(System.Text.Encoding.UTF8.GetBytes(value));
        System.Text.StringBuilder ret = new System.Text.StringBuilder(data.Length * 2);
        foreach (byte b in data)
        {
            ret.Append(b.ToString("x2")); // "x2" is already lowercase hex
        }
        return ret.ToString();
    }
}

I put this hash in the guid tag of the RSS. So if a news item is updated, I hope the editors change something in it that produces a different hash.
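Which text encoding feeds the hash matters for Swedish content: Encoding.ASCII replaces every character outside 7-bit ASCII with '?', so å, ä and ö all look the same to the hash, and two items that differ only in those letters would get the same guid. A small sketch that takes the encoding as a parameter makes the difference visible; ItemHash is a hypothetical name and the sample titles in the usage note are made up.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Sketch: MD5 hex digest of an item's text, with the text encoding passed in
// so ASCII and UTF-8 can be compared side by side.
public static class ItemHash
{
    public static string Md5Hex(string value, Encoding encoding)
    {
        using (MD5 md5 = MD5.Create())
        {
            byte[] digest = md5.ComputeHash(encoding.GetBytes(value));
            // BitConverter yields "D4-1D-8C-..."; drop the dashes and lowercase it.
            return BitConverter.ToString(digest).Replace("-", "").ToLowerInvariant();
        }
    }
}
```

Under ASCII, "Möte i Göteborg" and "Mäte i Gåteborg" both become "M?te i G?teborg" before hashing and collide; under UTF-8 they hash differently. MD5 is adequate here because the hash only detects changes; nothing depends on it resisting deliberate collisions.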

Building the RSS

Time to build the RSS. I start by creating the document with LINQ to XML (which, by the way, is pure love to use and deserves a blog post of its own):

// Creating the XDocument
XDocument xDocument = new XDocument(
    // standalone takes "yes" or "no"
    new XDeclaration("1.0", "windows-1252", "yes"),
    new XProcessingInstruction("xml-stylesheet", "type=\"text/xsl\" href=\"EvelntLog.xsl\""),
    new XElement("rss", new XAttribute("version", "2.0"),
        new XElement("channel",
            new XElement("title", "UV-rugbynyheter"), // "Underwater rugby news"
            // XElement escapes its own text, so the URL needs no HtmlEncode
            new XElement("link", "http://www.ssdf.se/t3.aspx?p=51459"),
            new XElement("description", "Undervattensrugbynyheter från SSDF"),
            new XElement("language", "sv-se")
        )
    )
);

And then I add each item to the feed:

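// root is the <channel> element, e.g. xDocument.Root.Element("channel")
root.Add(new XElement("item",
    new XElement("title", newsNode.SelectSingleNode("h1").InnerHtml),
    new XElement("description", newsNode.OuterHtml),
    new XElement("link", link),
    new XElement("author", "N/A"),
    // "r" does not convert to UTC by itself (assuming pubDate holds local time)
    new XElement("pubDate", pubDate.ToUniversalTime().ToString("r")),
    // The hash is not a URL, so mark the guid as not being a permalink
    new XElement("guid", new XAttribute("isPermaLink", "false"), postHash)
));

Finalizing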

I put this little program on my web server and used the Windows scheduler to run it every two hours. The final piece of code copies the generated file to the right directory:

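if (Convert.ToBoolean(ConfigurationManager.AppSettings["CopyFile"]))
{
    // ".\\" is the working directory; under the Windows scheduler it may not be
    // the program's own folder unless the task's "Start in" directory is set
    File.Copy(".\\" + ConfigurationManager.AppSettings["Filename"],
        Path.Combine(ConfigurationManager.AppSettings["TargetDir"],
            ConfigurationManager.AppSettings["Filename"]),
        true); // overwrite the previous feed
}

You can grab the source code for the first working version here. Now it’s refactor time!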
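One candidate for that refactoring: File.Copy with overwrite writes straight into the published file, so a feed reader or the web server reading it mid-copy could in principle get a truncated feed. A sketch of an alternative, assuming .NET Core 3.0 or later for the File.Move overload that takes an overwrite flag: copy to a temporary file next to the target, then move it into place. On the same volume the move is a rename rather than a byte copy, which gets close to an atomic swap, though that is not formally guaranteed. FeedPublisher and the ".tmp" suffix are hypothetical.

```csharp
using System;
using System.IO;

// Sketch: publish the feed by copying it to a temp file in the target
// directory and then moving that over the old feed.
public static class FeedPublisher
{
    public static void Publish(string sourceFile, string targetDir, string fileName)
    {
        string target = Path.Combine(targetDir, fileName);

        // Temp file in the same directory, so the final move is a rename on one volume.
        string temp = Path.Combine(targetDir, fileName + ".tmp");
        File.Copy(sourceFile, temp, true);

        // Replaces any existing feed; this overload needs .NET Core 3.0 or later.
        File.Move(temp, target, true);
    }
}
```

The call would slot into the CopyFile branch in place of File.Copy, with TargetDir and Filename still coming from the app settings.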
